Description

As of FY23, Combined Jewish Philanthropies (CJP) requires grantees and partner organizations to survey participants on their satisfaction with the organization, on at least an annual basis.1

Requirements

You will be asked to provide the following information in an online form that CJP will provide you:

- Description of your organization or program/event including intended outcomes

- Number of unique participants or individuals your organization or program/event has reached

- Data showing your organization or program/event Net Promoter Score (NPS) and general participant satisfaction

- Details about the survey methodology including total sample size, number of completed responses, how the survey was administered, and dates the survey was active.

To measure participant satisfaction consistently across organizations in our community, CJP requires grantees to create and administer a brief survey that helps us understand the extent to which members of the community are satisfied with the programs and events they have attended (virtually or in person). Organizations are required to survey participants at least annually. For some organizations, this means collecting contact information (e.g., email addresses) from participants who have engaged with the organization so that the survey can be sent to them. Satisfaction questions may be asked in a standalone survey or as part of a broader survey of participants designed to assess your program or organizational goals; however, reporting requirements apply to the satisfaction questions only. If your organization already administers a survey that collects this information, you may be able to use your existing questionnaire as long as its questions measure a similar construct of satisfaction to the one described above.

How this helps your organization

Routinely collecting participant satisfaction is an easy and valuable way for your organization to:

- Enhance participant retention and engagement: Participants who are more satisfied with their engagement with an organization are more likely to attend future programs or events with that organization, which may lead to increased retention and engagement.2

- Strengthen relationships and community partnerships: Satisfied participants are more likely to speak positively about your organization and recommend it to their family and friends, which fosters stronger relationships within the community.

- Demonstrate accountability and transparency: Actively seeking feedback and making data-driven improvements demonstrates a willingness to listen to your participants and meet their needs effectively.

By adhering to the survey requirements set by CJP and utilizing the provided satisfaction measures, you can consistently and comprehensively assess participant satisfaction. CJP is available to support you throughout the survey creation and collection process, ensuring you gather meaningful data and understand its implications for your organization.

Resources

CJP has brought together several tested and validated measures of satisfaction, listed below, for you to use in your survey. These include the Net Promoter Score (NPS), Customer Satisfaction (CSAT), and the American Customer Satisfaction Index (ACSI).3

- NPS: A single question intended to measure customer loyalty (the likelihood that an individual loves your program or service and would recommend it to family and friends) on a scale from 0 to 10. Respondents are grouped into Detractors (0-6), Passives (7-8), and Promoters (9-10).4

- CSAT: An index of questions designed to measure overall satisfaction, likelihood of engaging again, and likelihood of recommending.

- ACSI: An index which measures overall satisfaction and perceived quality of an experience against an expected ideal to determine satisfaction and loyalty.
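As an illustration of how the NPS groupings above turn into a single score, here is a minimal Python sketch. The function name `net_promoter_score` and the sample ratings are hypothetical and used only for illustration; the calculation itself follows the Detractor (0-6), Passive (7-8), and Promoter (9-10) ranges described above, expressed as percentage points.

```python
def net_promoter_score(ratings):
    """Return the NPS (-100 to 100) for a list of 0-10 'likely to recommend' ratings."""
    if not ratings:
        raise ValueError("at least one rating is required")
    if any(r < 0 or r > 10 for r in ratings):
        raise ValueError("ratings must be between 0 and 10")

    promoters = sum(1 for r in ratings if r >= 9)   # ratings of 9 or 10
    detractors = sum(1 for r in ratings if r <= 6)  # ratings of 0 through 6
    # Passives (7-8) count toward the total but not toward either group.
    return 100 * (promoters - detractors) / len(ratings)


# Hypothetical responses: 5 promoters, 3 passives, 2 detractors.
sample_ratings = [10, 9, 9, 10, 9, 8, 7, 7, 6, 3]
sample_nps = net_promoter_score(sample_ratings)
print(round(sample_nps))  # 30
```

Most survey platforms can export the raw 0-10 ratings, so a calculation like this could also be done in a spreadsheet; the sketch is only meant to make the arithmetic explicit.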

Whereas NPS measures the overall relationship a participant has with an organization, Customer Satisfaction (CSAT) measures participant satisfaction with a specific program or service.5 To capture both dimensions of participant satisfaction, CJP requires at minimum that the NPS question be paired with a question on overall satisfaction.

You are welcome to include additional questions and you may alter the language slightly to work with your program or event. You may also find it valuable to ask open-ended question(s) about why participants feel the way they do.

| Metrics: This is what you will measure to determine participant satisfaction |

Survey question bank: Use questions from here to capture participant satisfaction. Variations in question wording or type may be adapted to different audiences or survey modes if they reflect the same underlying construct of satisfaction |

| Required questions | |

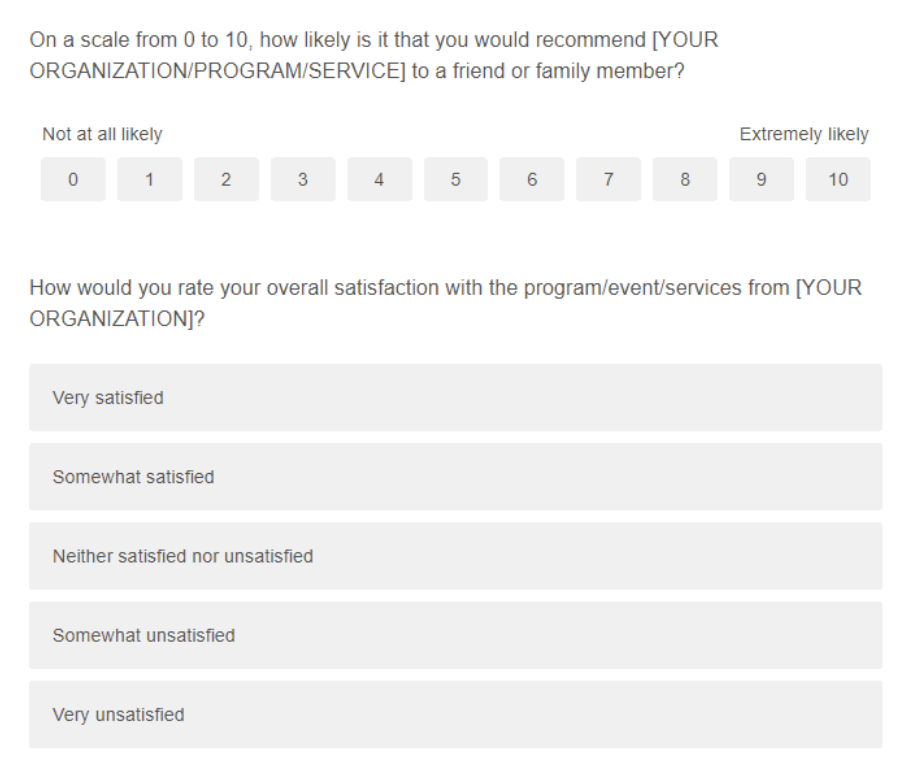

| Recommend (NPS) | On a scale from 0 to 10, how likely is it that you would recommend [YOUR ORGANIZATION/PROGRAM/SERVICE] to a friend or family member? (NPS = % promoters (9-10) - % detractors (0-6)) |

| Overall satisfaction (CSAT) | How would you rate your overall satisfaction with the program/event/services from [YOUR ORGANIZATION]? 1 Very satisfied; 2 Somewhat satisfied; 3 Neither satisfied nor unsatisfied; 4 Somewhat unsatisfied; 5 Very unsatisfied |

| Optional questions | |

| Repeat participation (CSAT) | Based on your most recent experience with [ORGANIZATION/PROGRAM NAME], how likely are you to attend/participate in a program with us again? 1 Extremely likely; 2 Very likely; 3 Somewhat likely; 4 Not very likely; 5 Not at all likely |

| Repeat participation (CSAT) | Based on your most recent experience with [ORGANIZATION/PROGRAM NAME], would you recommend our program/organization to a friend or family member? 1 Definitely would; 2 Probably would; 3 Not sure; 4 Probably would not; 5 Definitely would not |

| Overall satisfaction as of today (ACSI) | Using a 10-point scale on which “1” means “Very dissatisfied” and “10” means “Very satisfied,” how satisfied are you with [YOUR ORGANIZATIONS]’s program/ event/ services? |

| Satisfaction compared to respondent’s expectations (ACSI) | Now please rate the extent to which the program/event/services offered by [YOUR ORGANIZATION] have fallen short of or exceeded your expectations. Please use a 10-point scale on which “1” now means “Falls short of your expectations” and “10” means “Exceeds Your expectations.” |
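For the required overall satisfaction (CSAT) question, responses are usually reported as the share of participants choosing each answer, along with the combined share who were "very" or "somewhat" satisfied. The Python sketch below shows one way to tally this, assuming responses are stored as the numeric codes 1 through 5 from the question above. The names `CSAT_LABELS` and `csat_summary` and the sample counts are hypothetical.

```python
from collections import Counter

# Answer codes from the required overall satisfaction question.
CSAT_LABELS = {
    1: "Very satisfied",
    2: "Somewhat satisfied",
    3: "Neither satisfied nor unsatisfied",
    4: "Somewhat unsatisfied",
    5: "Very unsatisfied",
}


def csat_summary(responses):
    """Return (percent per answer label, percent satisfied) for coded 1-5 responses."""
    if not responses:
        raise ValueError("at least one response is required")
    if any(code not in CSAT_LABELS for code in responses):
        raise ValueError("responses must be coded 1 through 5")

    counts = Counter(responses)
    total = len(responses)
    distribution = {
        label: 100 * counts[code] / total for code, label in CSAT_LABELS.items()
    }
    # "Satisfied" here means codes 1 and 2 combined (an assumed convention).
    percent_satisfied = 100 * (counts[1] + counts[2]) / total
    return distribution, percent_satisfied


# Hypothetical data: 100 coded responses.
sample_responses = [1] * 21 + [2] * 52 + [3] * 15 + [4] * 8 + [5] * 4
sample_distribution, sample_satisfied = csat_summary(sample_responses)
print(sample_distribution["Very satisfied"], sample_satisfied)  # 21.0 73.0
```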

Available survey tools and recommendations for use

Use the table below to help identify the recommended survey platform for your survey. For more complex survey needs, users may wish to use a subscription-based platform; however, several free options are available and may be utilized for the purpose described herein.

| Platform | Price | Features/Best use |
| --- | --- | --- |
| Microsoft Forms | Free/Paid option | Easy to use, good if you use MS Office, basic question types, skip logic, and reporting features. |
| Google Forms | Free/Paid option | Easy to use, good if you use Google Workspace (G Suite), basic question types, skip logic, and reporting features. |
| HubSpot Forms | Free for subscribers | Good if you already use HubSpot, but survey features are limited. Better to integrate with SurveyMonkey. |
| SurveyMonkey | Free (limited features)/Paid (full features) | Advanced survey needs (branching logic, piped text), track email responses, survey templates, web reports and analysis, data export |
| Qualtrics | Free (limited features)/Paid (full features) | Advanced survey needs (branching logic, piped text), track email responses, survey templates, web reports and analysis, data export |

Additional tips for assessing participant satisfaction:

- Organizations that facilitate multiple programs or events throughout the year are encouraged (but not required) to survey participants at the conclusion of each program. These surveys should be administered as soon as the program concludes to achieve a high response rate.

- CJP staff is available to support you with creating your survey questionnaire and helping you understand the data you collect with it. If you need access to a survey platform or have any additional questions or needs, please contact Daniel Parmer, Associate Director, Research and Evaluation at DanielP@cjp.org.

Example survey reporting and questionnaire:

Survey reporting: (Example answers mapped to requirements listed above.)

- Description of your organization or program/event including intended outcomes: Our organization engages Jewish young adults (ages 18-35) in local social justice activities through participation in training and education seminars and volunteerism through a Jewish lens. These programs seek to increase the proportion of Jewish young adults that view service as a core component of their Jewish identity and to increase the feelings of connection to their Jewish peers and the Jewish communities where they live.

- Number of unique participants or individuals your organization or program/event has reached: Between July 1 and June 30 (FY22), our organization reached a total of 1,250 unique participants who attended at least one event.

- Data showing participant satisfaction: The majority of participants (73%) were satisfied with the program(s) they attended, including 21% who were “very satisfied” and 52% who were “somewhat satisfied.” Younger participants (18-24) and those still in college reported higher levels of satisfaction compared to participants ages 35 and older. Participants who attended more than one event also reported higher levels of satisfaction than those who attended just one event. Our program’s Net Promoter Score is 42, calculated as (# Promoters - # Detractors)/Total # Participants × 100. This score is higher than average (based on an industry benchmark of 35) and reflects a strong level of satisfaction and recommendation from participants to their friends/family. Additional findings and data are included in attachments.

- Details about the survey methodology including total sample size, number of completed responses, how the survey was administered, and dates the survey was active: Participants were surveyed at the time of registration to capture baseline demographics and metrics of identity and engagement, and again in a follow-up survey sent within a week of participation. Follow-up surveys were administered online and included questions about intended outcomes as well as participant satisfaction. Surveys were administered on a rolling basis throughout the year and were accessible for 3 weeks from the initial invitation. A total of 763 participants responded to the surveys, for an overall response rate of 61%.
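The response rate in the example above can be checked with a short calculation. This Python sketch assumes the total sample is the 1,250 unique participants reported earlier in the example, which the example implies but does not state outright; the function name `response_rate` is hypothetical.

```python
def response_rate(completed, invited):
    """Return the response rate as a percentage of people invited to the survey."""
    if invited <= 0:
        raise ValueError("invited must be greater than zero")
    if not 0 <= completed <= invited:
        raise ValueError("completed must be between 0 and invited")
    return 100 * completed / invited


# Figures from the example: 763 completed responses, 1,250 participants (assumed sample).
example_rate = response_rate(763, 1250)
print(f"{example_rate:.0f}%")  # 61%
```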

1 As of July 2023

2 Cf. Fornell, 1996; Castillo et al., 2018

3 Cf. Biesok and Wyród-Wróbel, 2021; Patti et al., 2020

4 An NPS is calculated using the following formula: (# Promoters - # Detractors)/Total # Participants × 100, which is equivalent to % Promoters - % Detractors

5 CSAT vs NPS: Which Customer Satisfaction Metric Is Best? - Qualtrics